- A recent incident where Elon Musk shared an AI-generated deepfake video of Kamala Harris on Twitter highlights the dangers of AI in spreading misinformation in electoral politics.

- AI technology can create convincing false information, as cases worldwide such as the 2023 Slovakian elections have shown, raising concerns about its impact on democratic processes and the responsibility of individuals and platforms to curb misinformation.

- Efforts to address AI-driven misinformation include state regulations and platform policies, but rapid technological advancements necessitate continuous development of strategies for transparency, public awareness, and robust legislative frameworks.

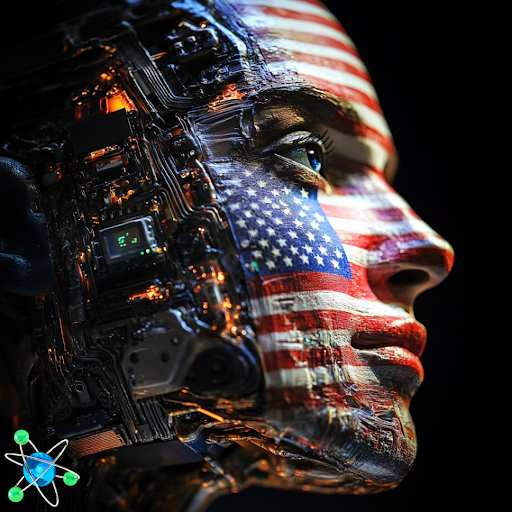

As artificial intelligence (AI) becomes more deeply integrated into everyday life, concerns about its impact on politics and social media are mounting. One recent example highlights the potential dangers of AI-generated content in electoral politics.

In a controversial incident, Elon Musk shared a video on Twitter featuring an AI-generated deepfake of Democratic candidate Kamala Harris. The video used a synthetic voice imitating Harris to claim that she had achieved her position only because of Joe Biden’s age and that she was merely a “diversity hire” with insufficient knowledge about running a country. The video’s creator disclosed at the outset that it was AI-generated, but Musk reposted it with only a laughing emoji and praise, which sparked significant backlash. Critics argue that Musk’s actions could have misled viewers and potentially violated Twitter’s policies against sharing synthetic or manipulated media.

This incident underscores a broader issue with AI technology: its capacity to create highly convincing yet false information that can deceive the public. Musk’s large following amplified the reach of the AI-generated video, raising concerns about the responsibility of high-profile individuals and platforms in curbing misinformation. Although Musk later clarified that the video was AI-generated, the initial spread of such content can have lasting effects, shaping public perception and potentially swaying opinions based on false premises.

Globally, the misuse of AI in politics has already had repercussions. For instance, in the 2023 Slovakian elections, fake audio clips impersonated a candidate, attributing fabricated statements about election rigging and price hikes to that candidate. This incident exemplifies the risks of AI-driven misinformation and the challenges it poses to democratic processes.

In response, some U.S. states have begun to craft their own regulations on the use of AI in political discourse. Meanwhile, social media platforms like YouTube have implemented policies requiring users to disclose the use of AI in creating content. Despite these measures, the rapid pace of technological advancement often outstrips regulatory efforts, leaving gaps that can be exploited.

As AI technology continues to evolve, so too must our strategies for managing its impact. Ensuring transparency, enhancing public awareness, and developing robust legislative frameworks are crucial steps in mitigating the risks associated with AI-generated misinformation. The challenge lies in balancing the benefits of AI innovation with the imperative to protect the integrity of democratic processes and the accuracy of information consumed by the public.